Hardware Setup

Machine Requirements

In general, we place each RonDB node on its own machine or VM. Technically, the resource requirements per node type are the same as in the Local Quickstart Tutorial. However, a more reasonable minimal cluster setup looks like this:

- Machine per Management Server: 1GiB memory, 1 CPU, 10GiB storage
- Machine per Data Node: 8GiB memory, 4 CPU, 256GiB storage
- Machine per MySQL Server: 2GiB memory, 8 CPU, 10GiB storage

Both data node memory and storage depend heavily on the size of the expected data, so the user has to size them. It is important to estimate beforehand what proportion of the data will be stored in memory and what proportion will be stored on disk, using disk-based columns.
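The estimate described above can be roughed out with a few lines of Python. This is only a sketch under simplifying assumptions: the function and parameter names are made up for illustration, and the figures cover raw data only, ignoring indexes, REDO logs, checkpoints and other overhead. It relies on the fact that data is partitioned across node groups while every replica within a node group holds a full copy of that group's data.

```python
from dataclasses import dataclass


@dataclass
class DataNodeEstimate:
    memory_gib: float  # raw in-memory data per data node
    disk_gib: float    # raw disk-column data per data node


def estimate_per_data_node(total_data_gib, in_memory_fraction, node_groups):
    """Hypothetical helper: split the expected data size across node groups.

    Each replica in a node group stores the whole share of its group, so
    the per-node figure depends on the number of node groups, not on the
    number of replicas.
    """
    if not 0.0 <= in_memory_fraction <= 1.0:
        raise ValueError("in_memory_fraction must be between 0 and 1")
    if node_groups < 1:
        raise ValueError("node_groups must be at least 1")
    per_group_gib = total_data_gib / node_groups
    return DataNodeEstimate(
        memory_gib=per_group_gib * in_memory_fraction,
        disk_gib=per_group_gib * (1.0 - in_memory_fraction),
    )


# Example: 400 GiB of data, a quarter of it in memory, 2 node groups.
estimate = estimate_per_data_node(400, 0.25, node_groups=2)
print(estimate)
```

The resulting numbers are a lower bound; real machines need headroom on top of them.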

In this tutorial, we will be running with the following number of machines:

- Management Servers: 2 (minimum 1)
- Data Nodes: 4 (2 node groups x 2 replicas, 1 x 1 is also possible)
- MySQL Servers: 2 (minimum 1)
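To get a feel for the total footprint of this setup, the machine counts can be combined with the minimal per-machine figures listed earlier. The following is a small illustrative sketch; the dictionary keys and function names are assumptions made for this example and are not part of any RonDB tooling.

```python
# Minimal per-machine figures taken from the requirements list above.
SPECS = {
    "management_server": {"memory_gib": 1, "cpus": 1, "storage_gib": 10},
    "data_node": {"memory_gib": 8, "cpus": 4, "storage_gib": 256},
    "mysql_server": {"memory_gib": 2, "cpus": 8, "storage_gib": 10},
}


def machine_counts(node_groups, replicas, management_servers, mysql_servers):
    """Number of machines per node type; data nodes = node groups x replicas."""
    return {
        "management_server": management_servers,
        "data_node": node_groups * replicas,
        "mysql_server": mysql_servers,
    }


def total_resources(counts):
    """Sum machines, memory, CPUs and storage over all node types."""
    totals = {"machines": 0, "memory_gib": 0, "cpus": 0, "storage_gib": 0}
    for node_type, count in counts.items():
        totals["machines"] += count
        for resource, amount in SPECS[node_type].items():
            totals[resource] += amount * count
    return totals


# The topology used in this tutorial: 2 node groups x 2 replicas.
counts = machine_counts(node_groups=2, replicas=2,
                        management_servers=2, mysql_servers=2)
totals = total_resources(counts)
print(counts)
print(totals)
```

For the tutorial topology this comes to 8 machines with 38GiB memory, 34 CPUs and 1064GiB storage in total.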

Every machine running RonDB should:

- Have Linux installed (tested on Ubuntu 20.04, 22.04 & Red Hat-compatible 8+)
- Have RonDB installed (22.10 recommended)
- Have network access to all other machines
- Be in the same cloud region

Availability Zones

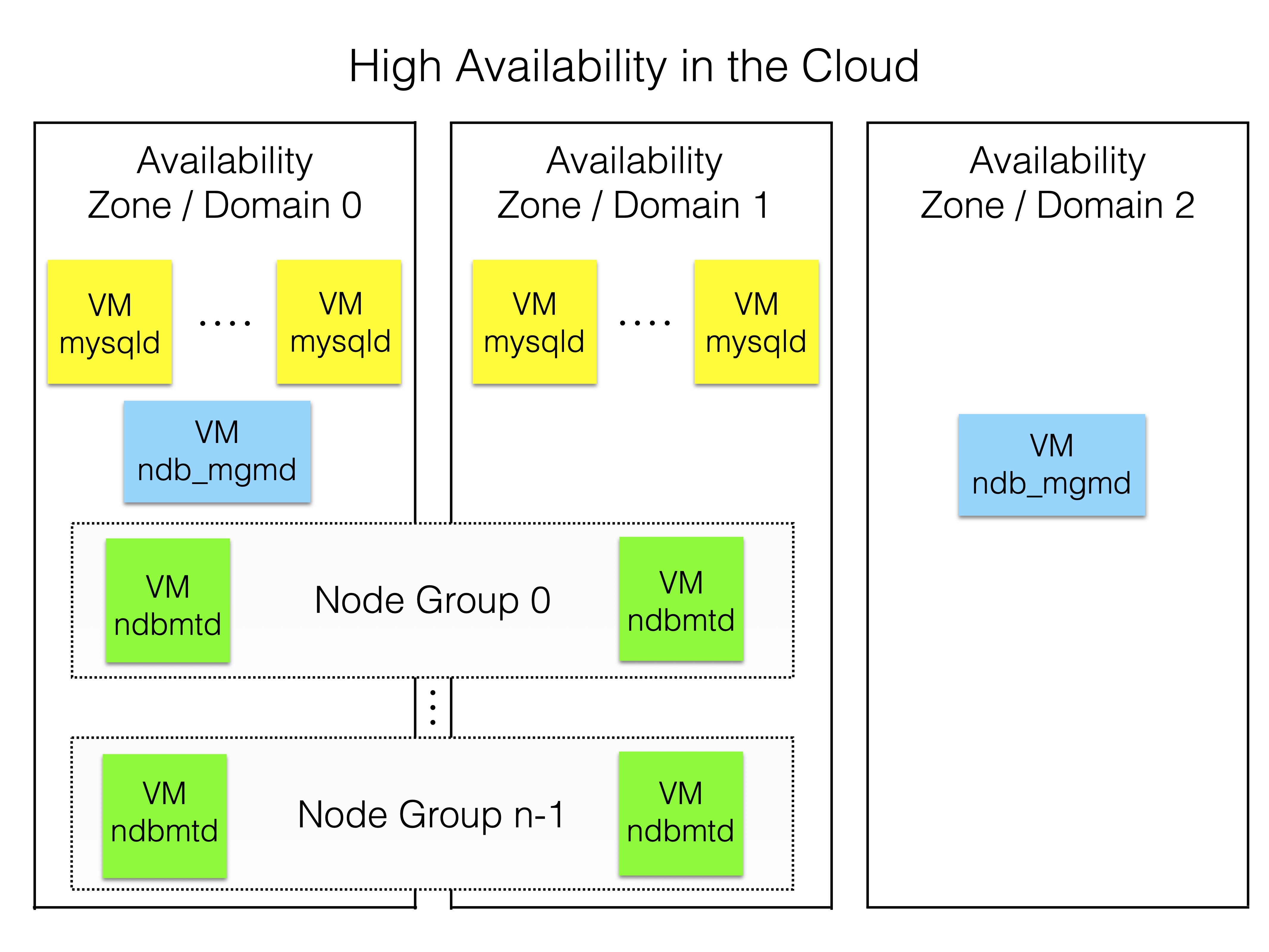

In cloud environments, utilizing multiple Availability Zones (AZs) is recommended for high availability. Note that AZs are named differently across cloud providers and offer varying levels of granularity.

An optimal setup is illustrated in the figure below:

Ideally, each replica of a node group is placed in a separate AZ. MySQL or other API servers can be distributed amongst these AZs. The first management server, however, should be placed in its own AZ.

Management servers typically serve as arbitrators during node failures or network partitions. When an AZ fails, the data nodes in the remaining AZs ask the arbitrator whether they may continue running. If they cannot reach the arbitrator, these nodes will fail. Consequently, placing the arbitrator in the same AZ as a set of data nodes significantly increases the risk of cluster failure due to AZ failure. In our example this is a 50% chance, since one of the two AZs hosting data nodes would also host the arbitrator.

Regardless of the arbitrator’s placement relative to data nodes, the cluster can withstand network partitions between data node AZs. This is because data nodes in the same AZ as the arbitrator will maintain contact with it, ensuring continued operation.
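The effect of arbitrator placement can be illustrated with a small simulation. This is a deliberately simplified model of the rule described above, not RonDB's actual arbitration protocol: it only considers the loss of a whole AZ, a single fixed arbitrator, and the requirement that every node group keeps at least one replica. The function name and the AZ labels are made up for this example.

```python
def survives_az_failure(data_nodes, arbitrator_az, failed_az):
    """Simplified model: does the cluster stay up when `failed_az` is lost?

    data_nodes is a list of (node_group, az) tuples.
    """
    survivors = [(group, az) for group, az in data_nodes if az != failed_az]
    if len(survivors) == len(data_nodes):
        # No data node was lost, so no arbitration is needed.
        return True
    all_groups = {group for group, _ in data_nodes}
    surviving_groups = {group for group, _ in survivors}
    if surviving_groups != all_groups:
        # Some node group lost all of its replicas.
        return False
    # Remaining data nodes must be able to reach the arbitrator.
    return arbitrator_az != failed_az


# 2 node groups x 2 replicas, each replica of a group in a separate AZ.
data_nodes = [(0, "az-a"), (0, "az-b"), (1, "az-a"), (1, "az-b")]

# Arbitrator in its own AZ versus sharing an AZ with data nodes.
own_az = {az: survives_az_failure(data_nodes, "az-c", az)
          for az in ("az-a", "az-b", "az-c")}
shared_az = {az: survives_az_failure(data_nodes, "az-a", az)
             for az in ("az-a", "az-b")}
print(own_az)
print(shared_az)
```

In this model, the cluster with the arbitrator in its own AZ survives the loss of any single AZ, while the shared placement survives only one of the two possible data node AZ failures.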

For further details on RonDB’s use of node groups and arbitration for high availability, refer to the Resiliency Model.

Local NVMe drives vs. Block Storage

In a cloud setting, local NVMe drives have various pros and cons in comparison to network drives (block storage). Generally, NVMe drives are considerably cheaper, a lot faster (no network latency) and have higher bandwidth. However, they are also less durable and will not survive a VM failure. They may also take more expertise to set up.

For RonDB specifically, there are some further pros and cons.

Advantages of NVMe drives:

- High performance for reads/writes of disk column data
- Possibility of parallel disk column reads with multiple NVMe drives
- High performance for REDO log writes & local checkpointing
- Normal node restarts are very fast
- Possibility of formatting multiple drives differently depending on usage

Advantages of block storage:

- No initial node restarts when exchanging data node VMs (less load on system)

NVMe drives are therefore recommended for performance and cost reasons. Block storage, on the other hand, is recommended for faster recovery and reliability.