Global replication - Tutorial introduction

The following tutorials are a deployment-agnostic guide to setting up global replication with RonDB. They should be followed in order; however, each tutorial creates a setup that is valuable on its own. The further the tutorials progress, the more complex the setup becomes.

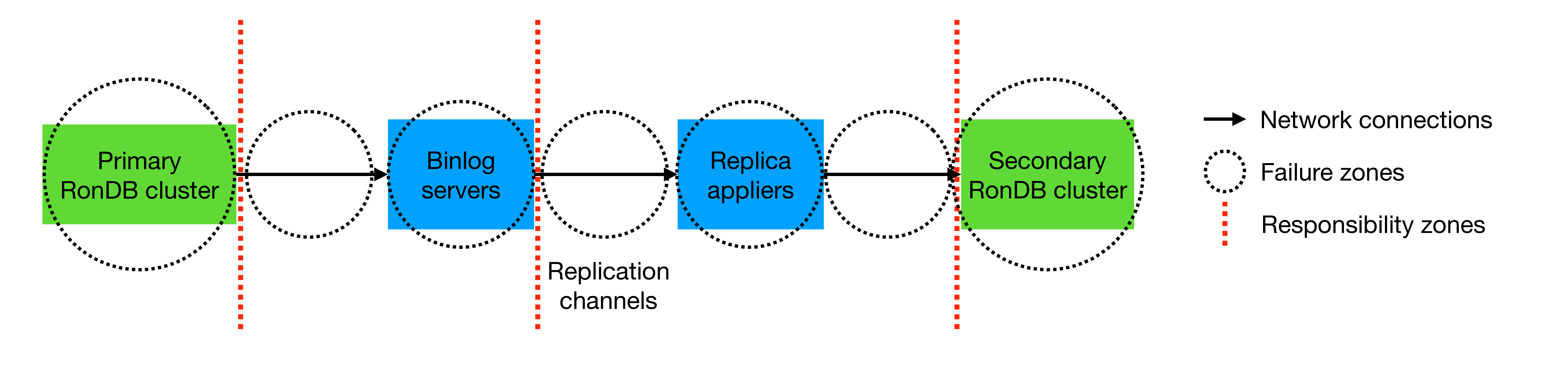

Failure and responsibility zones

The tutorials attempt to divide the global system into responsibility and failure zones. In an active-passive replication setup, an interpretation of this could be described as follows:

Each responsibility zone can be handled by a single logical entity, which turns it into a self-managed system. Such an entity may expose an API to interact with, and it has to be aware of the failure zones it contains. To keep its zone from failing, it can monitor, scale, restart and load balance the entities within.
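To make the idea of a self-managed responsibility zone more concrete, here is a minimal sketch in Python. It is not part of RonDB: the names `Member`, `ResponsibilityZone`, `reconcile` and `has_failed` are hypothetical, and the rule that a zone counts as failed once none of its members is healthy is an assumption made for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Member:
    """One supervised entity, e.g. a single binlog server (hypothetical model)."""
    name: str
    failure_zone: str                # e.g. an availability zone or a host
    is_healthy: Callable[[], bool]   # health probe supplied by the deployment
    restart: Callable[[], None]      # restart hook supplied by the deployment


@dataclass
class ResponsibilityZone:
    """A logical entity that owns members spread over several failure zones."""
    name: str
    members: List[Member] = field(default_factory=list)

    def reconcile(self) -> Dict[str, List[str]]:
        """Restart unhealthy members; return the restarted names per failure zone."""
        restarted: Dict[str, List[str]] = {}
        for member in self.members:
            if not member.is_healthy():
                member.restart()
                restarted.setdefault(member.failure_zone, []).append(member.name)
        return restarted

    def has_failed(self) -> bool:
        """Assumption: the zone as a whole has failed if no member is healthy."""
        return not any(member.is_healthy() for member in self.members)
```

An entity like this would call `reconcile()` periodically. Only when `has_failed()` returns true does the problem leave the zone and become a matter for cross-zone handling.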

In the first few tutorials, we will focus on one responsibility zone at a time. Setting up the RonDB clusters themselves is not covered here, since this is explained in other tutorials (Local quickstart and Operating a cluster).

Global actions

As we progress through the tutorials, we will nevertheless need to perform cross-zone actions. Apart from initial system startup, this happens when one or more responsibility zones fail.

To give an example: If the responsibility zone of the binlog servers fails, the following global fixes need to be made:

- Restart binlog servers from scratch (existing binlogs are now useless)
- Create a backup in the primary cluster
- Restart the secondary cluster from the backup
- Recalibrate the replica appliers to check the backup’s epoch

This suggests that it can make sense to have a detached entity that owns the responsibility zone of the entire system. Workflows such as this one are described in the last tutorials.
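As a rough illustration of what such a detached entity might do, the sketch below runs the four fixes for a failed binlog server zone strictly in order and stops at the first failure. It only demonstrates the ordering. The function names (`run_global_recovery`, `binlog_zone_recovery`) and the four callables are hypothetical placeholders that a deployment would have to supply; none of them is a RonDB API.

```python
from typing import Callable, List, Tuple

# A step is a human-readable name plus an action supplied by the deployment.
Step = Tuple[str, Callable[[], None]]


def run_global_recovery(steps: List[Step]) -> List[str]:
    """Run the steps in order and return the names of those that completed.

    Stops at the first failing step, because later steps depend on earlier ones
    (for example, the secondary cluster cannot be restored before the backup exists).
    """
    completed: List[str] = []
    for name, action in steps:
        try:
            action()
        except Exception as exc:
            raise RuntimeError(
                f"step '{name}' failed; completed so far: {completed}"
            ) from exc
        completed.append(name)
    return completed


def binlog_zone_recovery(
    restart_binlog_servers: Callable[[], None],
    create_primary_backup: Callable[[], None],
    restore_secondary_from_backup: Callable[[], None],
    recalibrate_replica_appliers: Callable[[], None],
) -> List[Step]:
    """Build the ordered workflow for a failed binlog server zone."""
    return [
        ("restart binlog servers from scratch", restart_binlog_servers),
        ("create a backup in the primary cluster", create_primary_backup),
        ("restart the secondary cluster from the backup", restore_secondary_from_backup),
        ("recalibrate the replica appliers", recalibrate_replica_appliers),
    ]
```

Keeping the workflow as plain data (a list of named steps) makes it easy to log progress and to see which zone each step touches, which is the kind of overview a system-wide owner needs.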